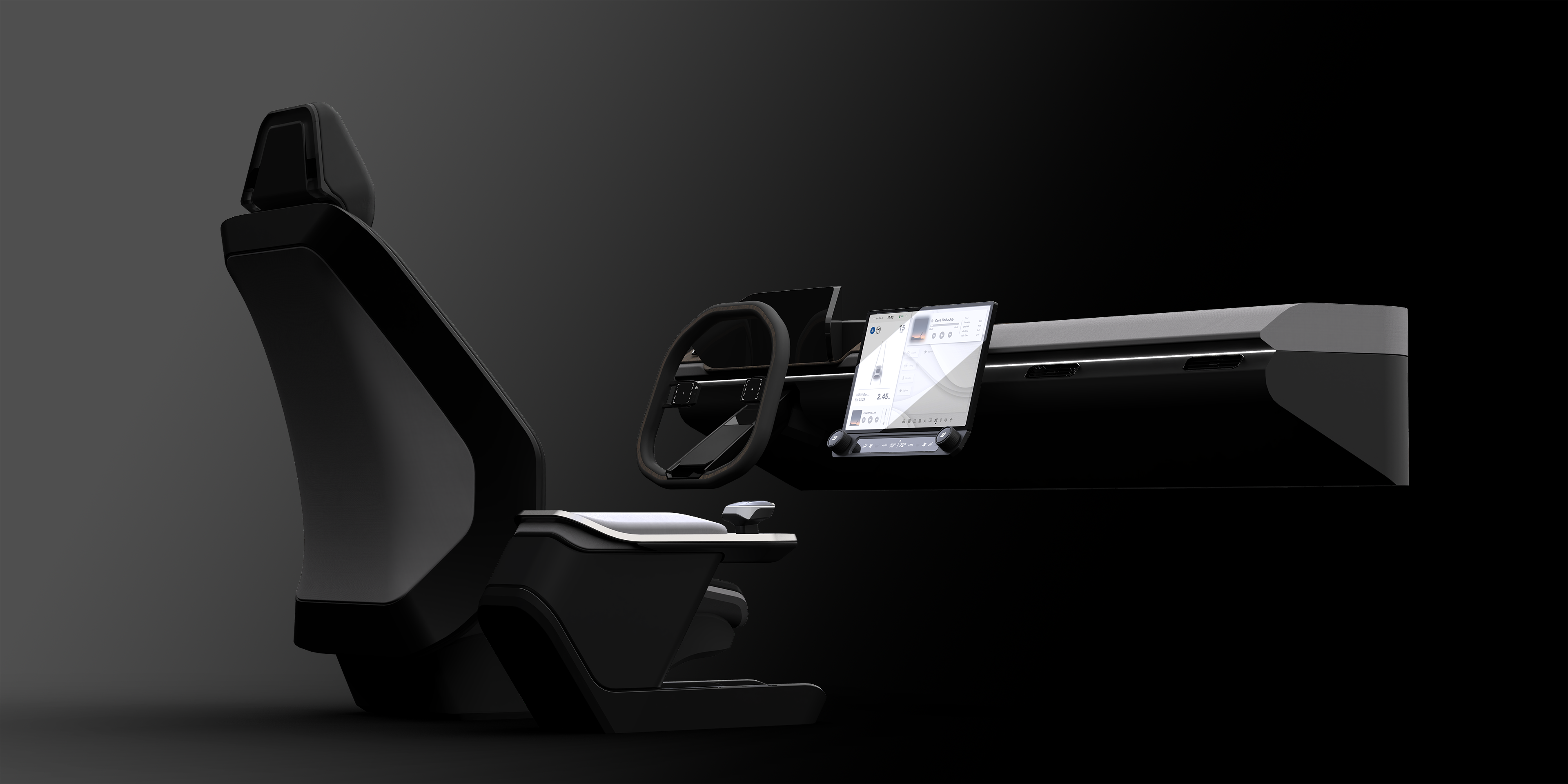

Auto Sphere

L3 / L4 Autonomous Vehicle Interface

Auto Sphere is a human-vehicle interface designed for L3 and L4 driving automation. It enhances the overall driving experience and also enables users to control the infotainment system through the controller.

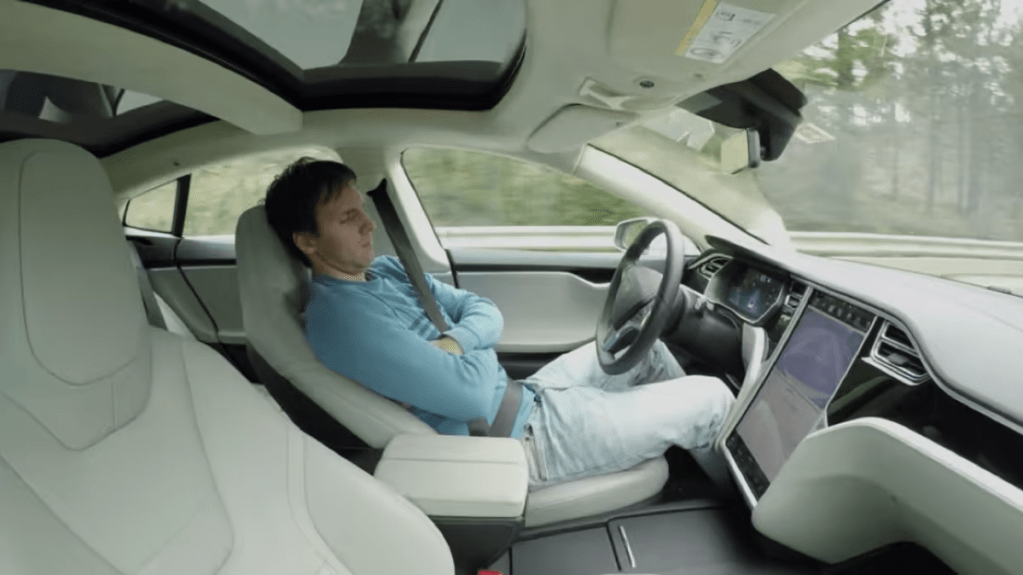

You are leaning back in your seat, admiring the breathtaking scenery while your car navigates the national park on its own. Then you decide to pause the car and take a walk. But how?

A New Experience

Auto Sphere features a controller and a user interface that let drivers control or change vehicle automation in a simple and intuitive way. It simplifies the decision-making process and provides a seamless user experience.

Let’s dive into the story.

Initial Research

How do you deal with these cases when the vehicle is driving on its own?

Case 1

You want to pass the slow-moving truck in front of you.

Case 2

You’re riding on the highway and a logo sign makes you hungry.

Case 3

You’re touring the national park and plan to stop the car and take a walk.

What’s Missing: Not Everything is Planned

Interviews with 5 drivers who had taken long-haul (>3 hrs) trips showed that:

- All 5 made unplanned stops for rest or meals during the trip.

- In most cases, none of them chose specific places for rest or meals before the trip.

- 4 of the 5 had driven in a national park, and all 4 made unplanned roadside stops.

What you’re expecting:

The reality:

People’s Expectation

A short survey was conducted to find out what people expect from interaction in future autonomous vehicles.

| Driving Experience | Number of People |
|---|---|
| ≤1 year | 4 |
| >1 year, ≤3 years | 6 |
| >3 years, ≤5 years | 5 |
| >5 years | 11 |
| No driver’s license | 1 |

Will you enable vehicle automation?

⬤ Yes, let machine take full control

⬤ Yes, but supervise and intervene

⬤ Only when road condition is clear

⬤ Never

Expectation of infotainment system:

⬤ Larger display, more content

⬤ Display trip related info

⬤ More effective input instead of touching

⬤ Voice command

⬤ Connect and control with smart phone

What will you do?

⬤ Non-Visual Task (Leaning)

⬤ Normal Task (Normal position)

⬤ Productivity (Further from dash)

⬤ Eat

Findings

- Automation information should be accessible to the driver. (20/26)

- Across all levels of driving experience, most people would not hand full control to the machine but would retain some control. (24/26)

- There is a strong need for input that is more effective and clearer than touch. (24/26)

- Non-visual task, normal task, and productivity task are all highly anticipated.

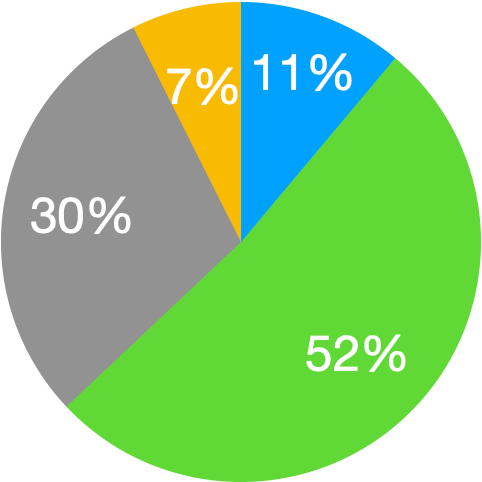

Will you use vehicle automation?

A. Yes, let machine take full control

B. Yes, but supervise and intervene

C. Only when road condition is clear

D. Never

Though self-driving technology frees the driver, we will still view driving-related info and interact with the vehicle during automation.

Many unplanned tasks occur during a trip. Drivers need to intervene in the automation even when the steering wheel is out of reach.

As a result, there is a clear demand for an effective interface for riding under vehicle automation.

Design – System

How do we become decision makers?

Drivers no longer have to intervene in the vehicle’s regular automation; they only need to make decisions.

Then, we need a convenient way to let the vehicle know what we want.

User Behavior:

How do we make a decision?

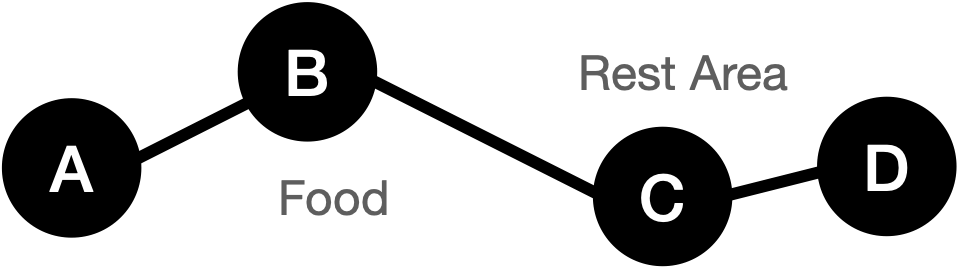

In summary, two kinds of stimulus lead us to make decisions when riding in a car:

Inside-out Stimulus

An inside-out stimulus is usually driven by ‘I want to’. It is a spontaneous impulse that comes from within. For example: I go to the next rest area because I’m tired.

Outside-in Stimulus

An outside-in stimulus comes from the outside. For example: I reroute to the viewpoint because the sign makes me curious.

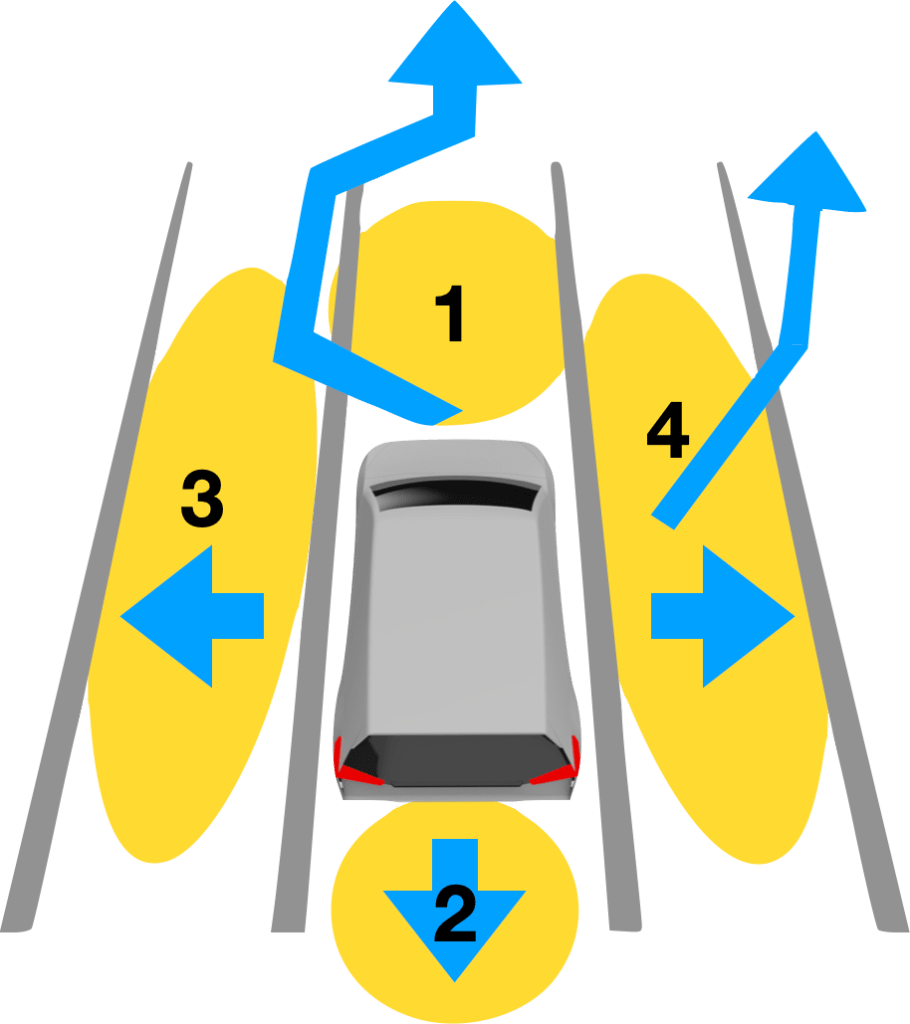

Vehicle Behavior:

Automation Intervention

Typically, drivers intervene in the automation when they need to (including but not limited to):

- Pass the vehicle in front

- Change to a faster lane

- Reroute to a new destination

- Stop at the roadside

Intervention behavior falls into two categories:

Destination-Related:

The vehicle deviates from the planned route and heads to a new destination.

Non-Deviated:

Actions along the planned route, such as passing another vehicle and roadside parking.

User & Vehicle

The interface should address the user’s demands arising from both kinds of stimulus and display feedback. As a result, we need an input device and an information display that support the following tasks:

- Locate a new/adjacent destination

- Input a non-deviated movement command

- Provide feedback

| Type | ‘I want to’ | ‘I see’ |
|---|---|---|
| Destination-Related | Search for a new destination | Locate the destination |
| Non-Deviated | Direct Input Command | Direct Input Command |

Input Device

Users use the input device to search for or locate a destination, and to tell the vehicle ‘how to move’.

Information Display

It displays information and feedback.

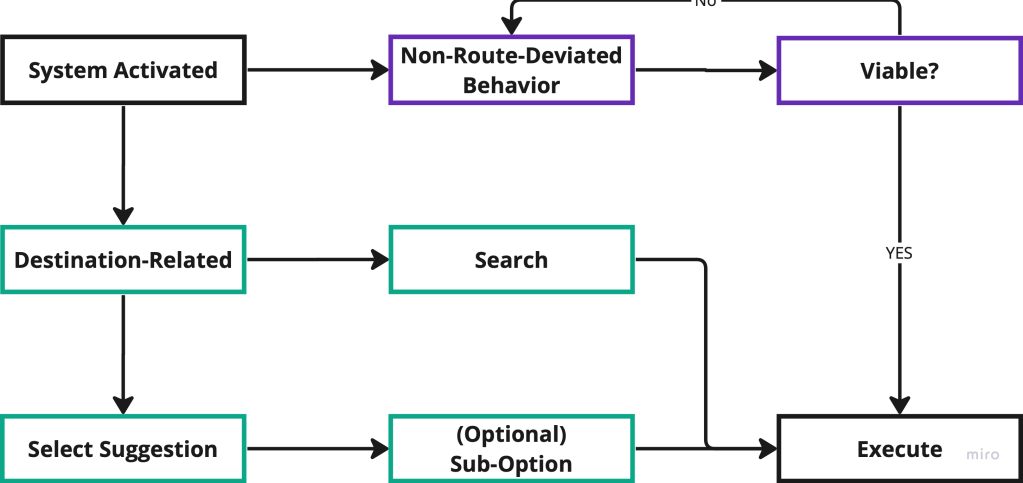

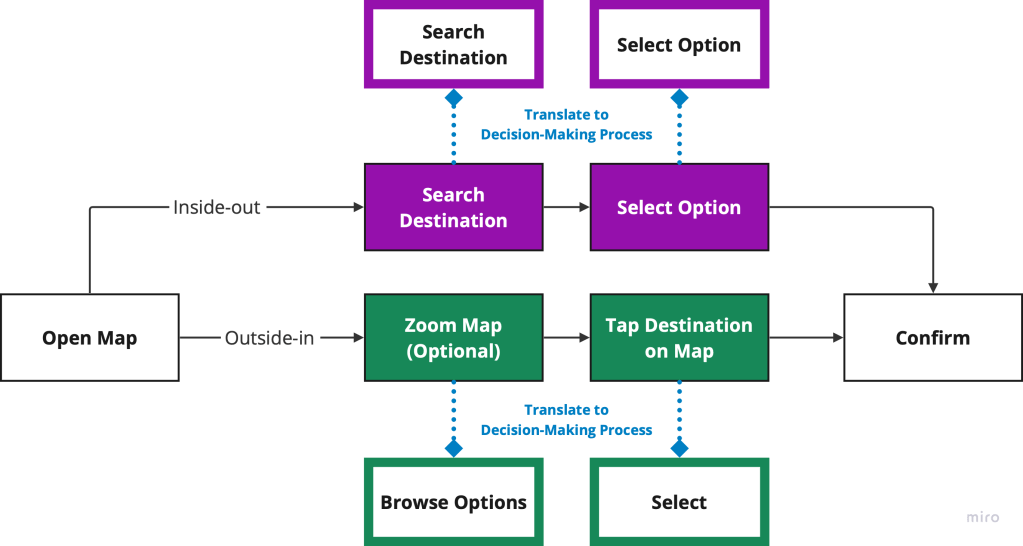

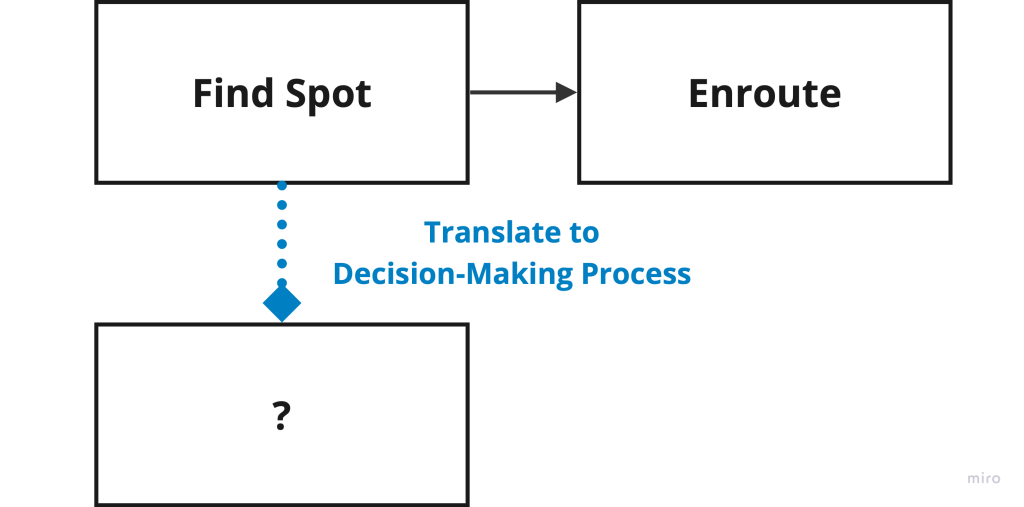

System Workflow

The functioning of the interface varies depending on the type of command it receives.

When the movement is not deviated, the system assesses its feasibility and provides the operator with feedback before executing it.

In case of a destination-related command, the interface enables the operator to either select a new destination based on distance or search for the location directly.
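The branching described above can be sketched roughly as follows. This is a minimal illustration, not the project's implementation: the names `CommandType`, `Command`, and `handle_command`, the action labels, and the `feasible` flag (a stand-in for the vehicle's own feasibility assessment) are all assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum, auto


class CommandType(Enum):
    NON_DEVIATED = auto()         # stays on the planned route (lane change, overtake)
    DESTINATION_RELATED = auto()  # leaves the planned route for a new destination


@dataclass
class Command:
    kind: CommandType
    action: str            # hypothetical label, e.g. "change_lane_left"
    feasible: bool = True  # assumed result of the vehicle's feasibility check


def handle_command(cmd: Command) -> list:
    """Return the ordered interface steps for one command."""
    if cmd.kind is CommandType.NON_DEVIATED:
        steps = ["assess feasibility"]
        if cmd.feasible:
            # Feedback is shown before the movement is executed.
            steps += ["show feedback: viable", f"execute {cmd.action}"]
        else:
            steps += ["show feedback: not viable"]
        return steps
    # Destination-related: pick by distance or search directly, then reroute.
    return ["choose destination by distance or search", "confirm destination", "reroute"]
```

For example, `handle_command(Command(CommandType.NON_DEVIATED, "overtake", feasible=False))` stops after the feedback step and never reaches execution.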

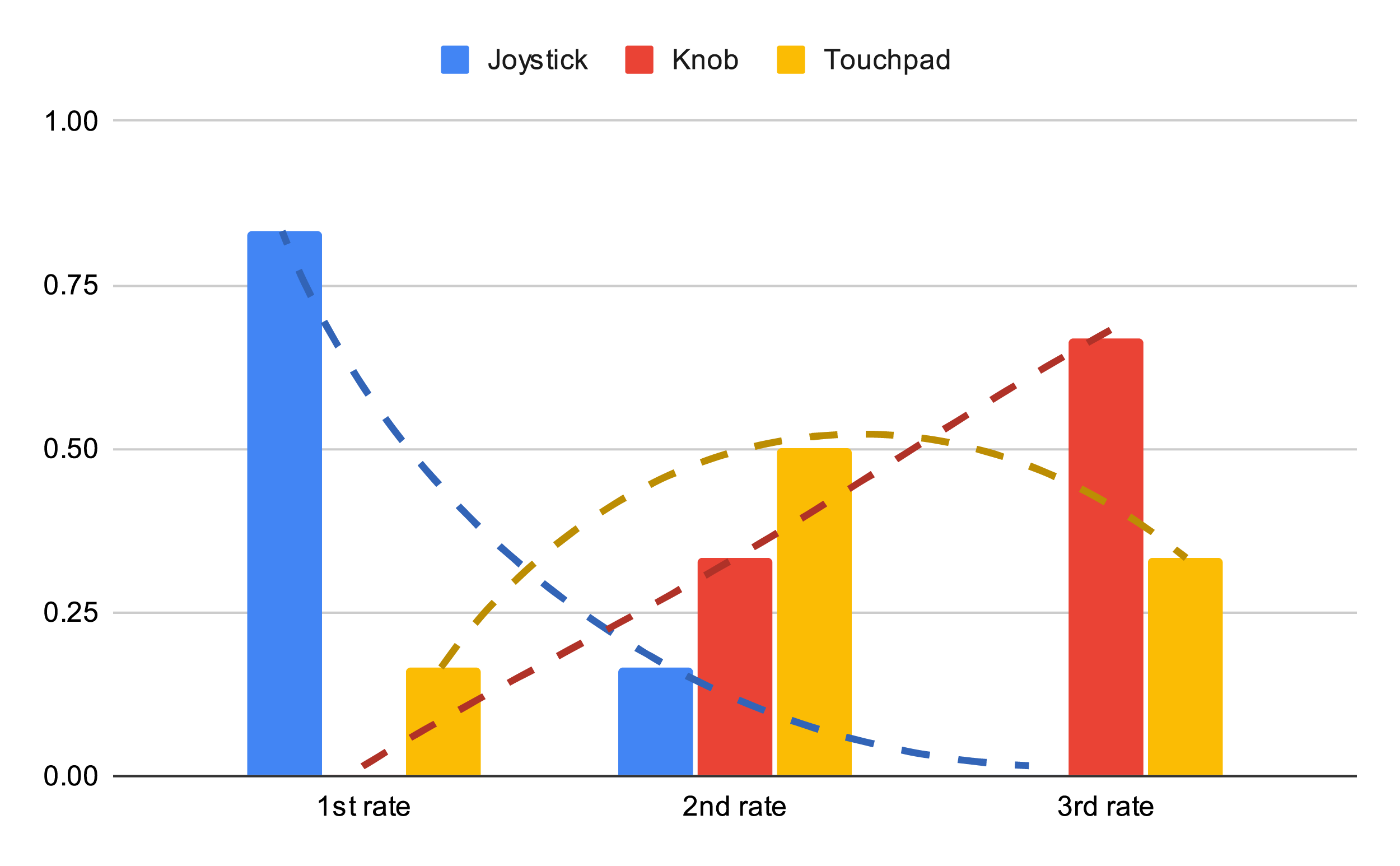

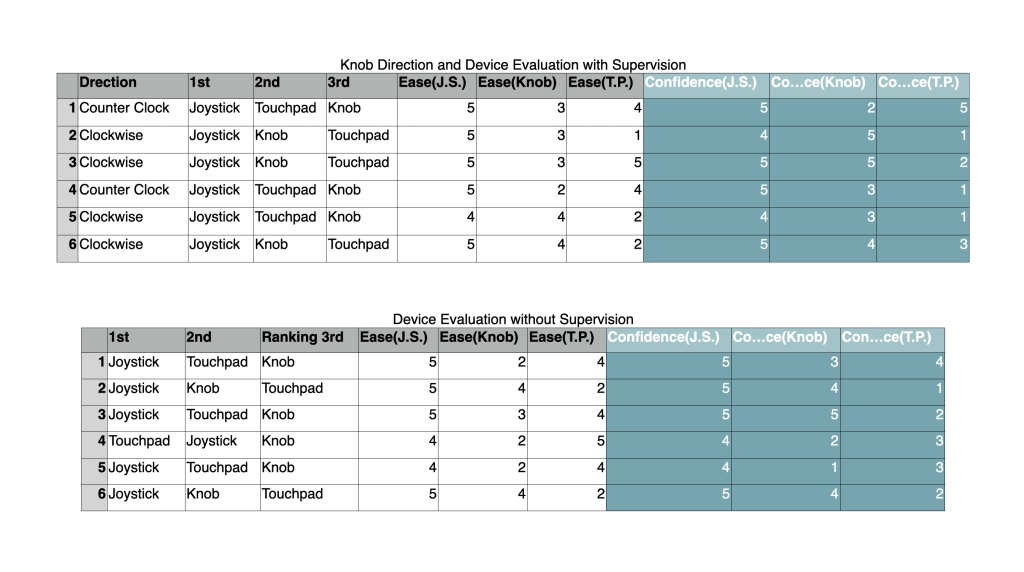

Design – Controller User Study

User Test Design:

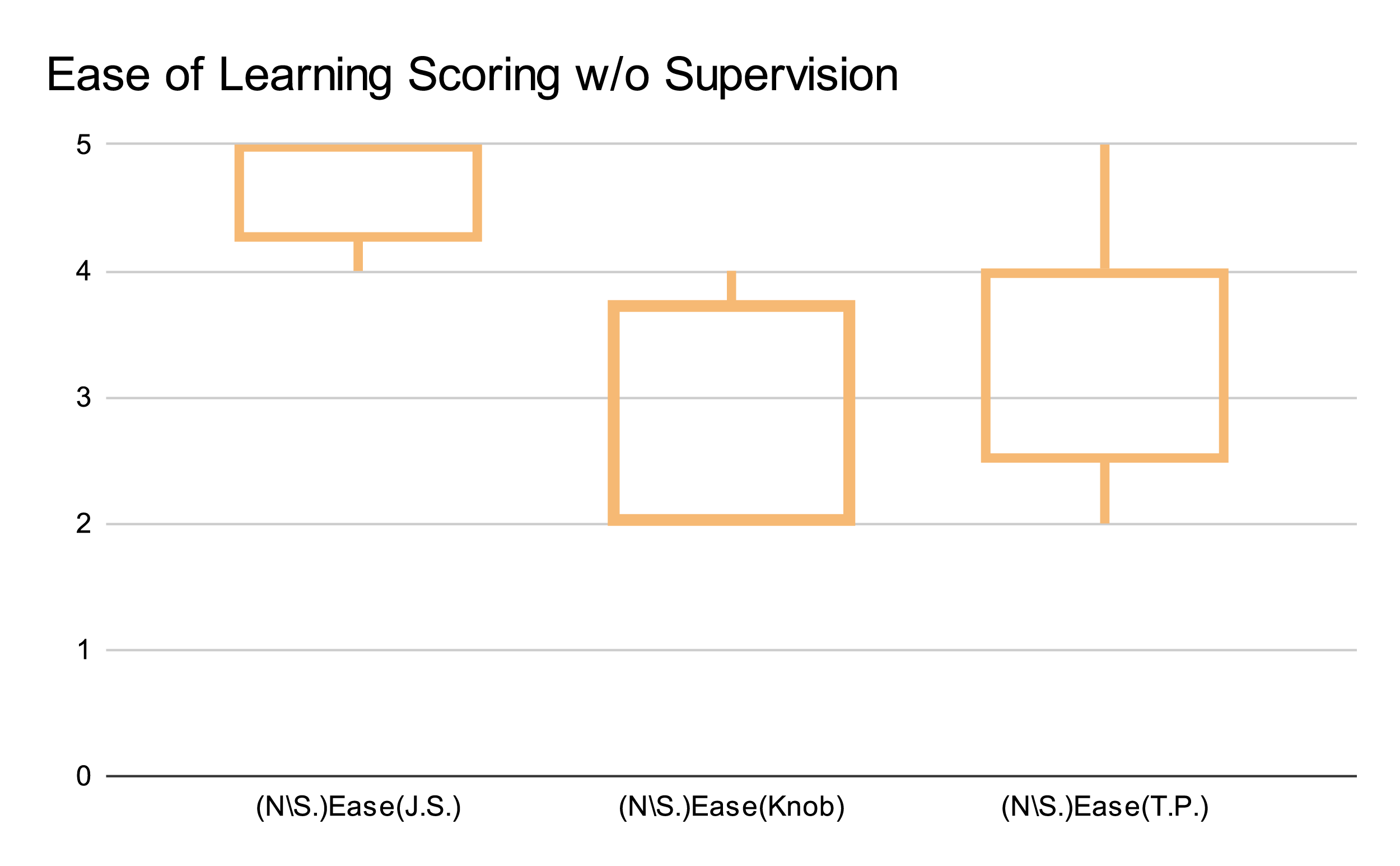

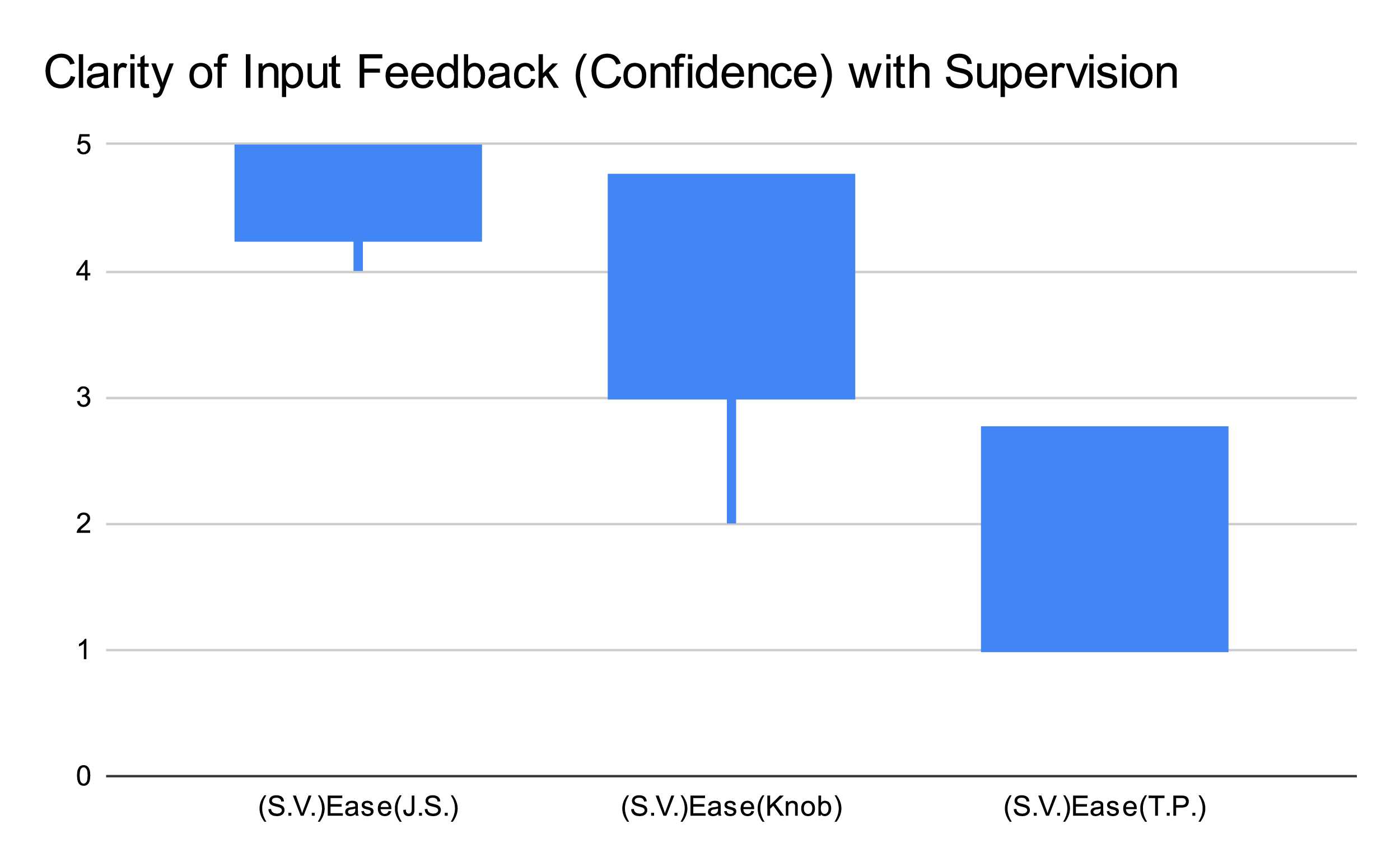

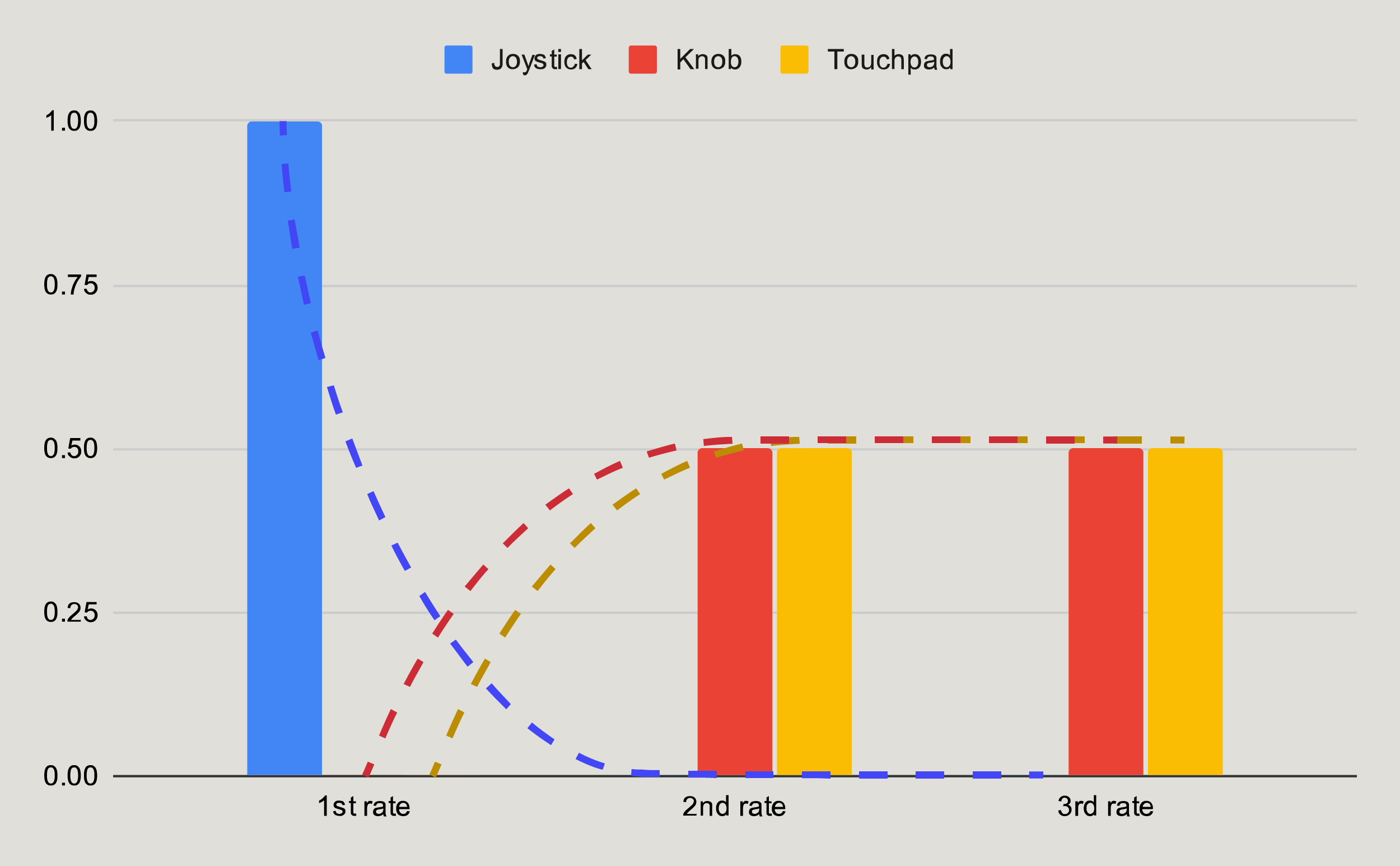

Preference, Learning Cost, and Ease of Use

Three proposed devices: Touchpad, Knob, and Joystick.

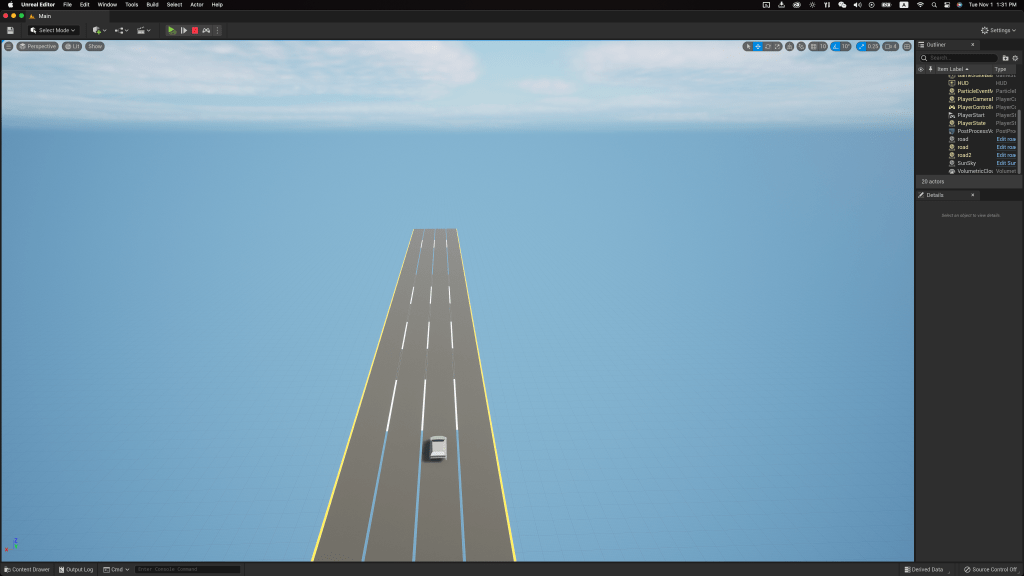

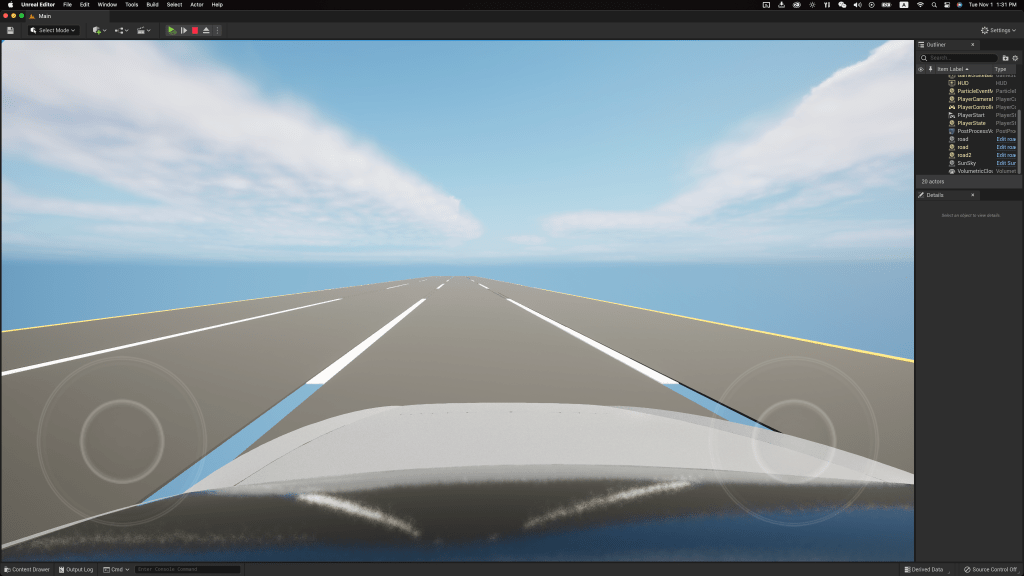

A 3D-printed knob and joystick attached to a keyboard, along with a touchpad, let participants input commands in a simulated environment built with UE5.

Participants were asked to use each controller for 30 seconds to switch lanes on a four-lane road in the simulator.

The test was run under two conditions: supervising and distracted.

- Supervising condition: testers were asked to supervise the road throughout the whole process

- Distracted condition: testers were free to do non-driving-related tasks

After the test, participants scored and ranked the experience from three perspectives: ease of use, confidence in the input, and overall ranking.

User Test – Result

| Metric | Supervised | Distracted |
|---|---|---|
| Ease (1-5 scale): ease of learning and using the device, and its intuitiveness. |  |  |
| Confidence (1-5 scale): clarity of the input feedback besides the vehicle’s movement. |  |  |
| Overall Ranking |  |  |

View Raw Data

The joystick was found to outperform both the knob and touchpad in all tests and evaluations, as indicated by the scores of six participants.

Several issues were spotted when using the knob or the touchpad:

Knob:

- Mapping clockwise and counterclockwise rotation to commands was challenging for some users, which raised the learning cost and the risk of input errors.

- Half of the testers found the knob less comfortable to operate than the other two options.

Touchpad:

- When the driver’s position is offset from the touchpad’s centerline, the system is prone to misinterpreting the direction of gestures, resulting in low accuracy.

- Despite the strong vibration feedback when touched, most testers found the touchpad’s physical feedback to be the least perceptible, and they were uncertain about the accuracy of their input.

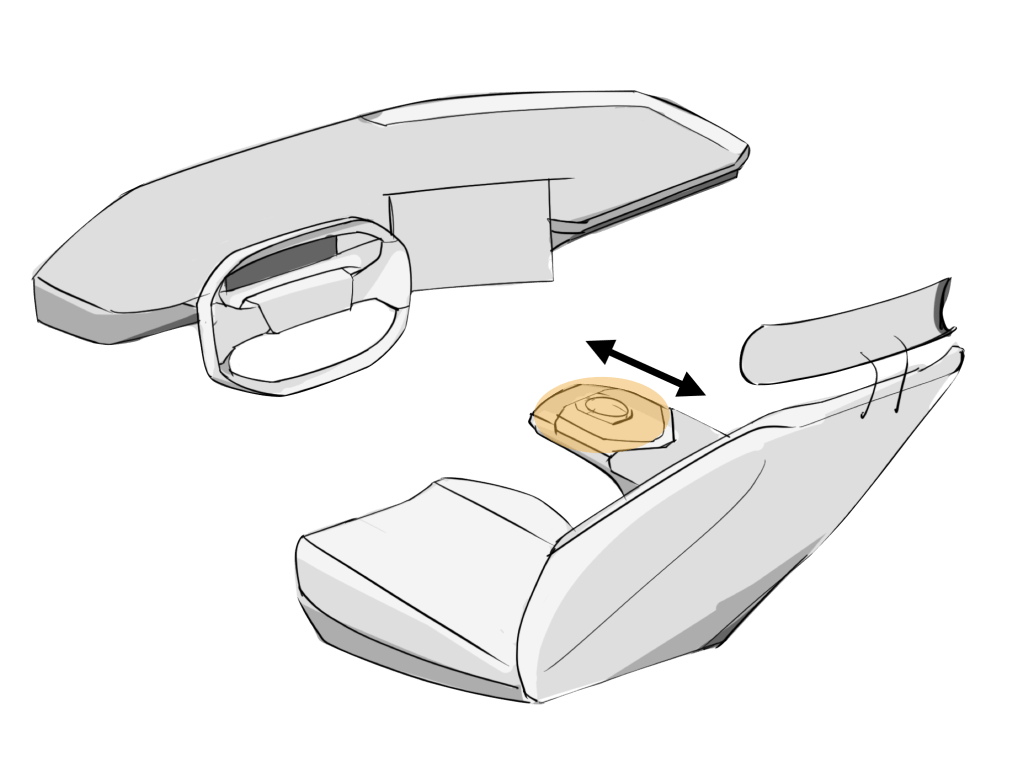

Industrial Design – Joystick

Drawing from prior user research and workflow studies, the controller should facilitate users in performing the following tasks:

- Entering two types of commands: destination-related (deviated) and non-deviated.

- Selecting options and confirming them.

- Navigating back and forth.

The operator should be able to input commands comfortably across a wide range of seat positions.

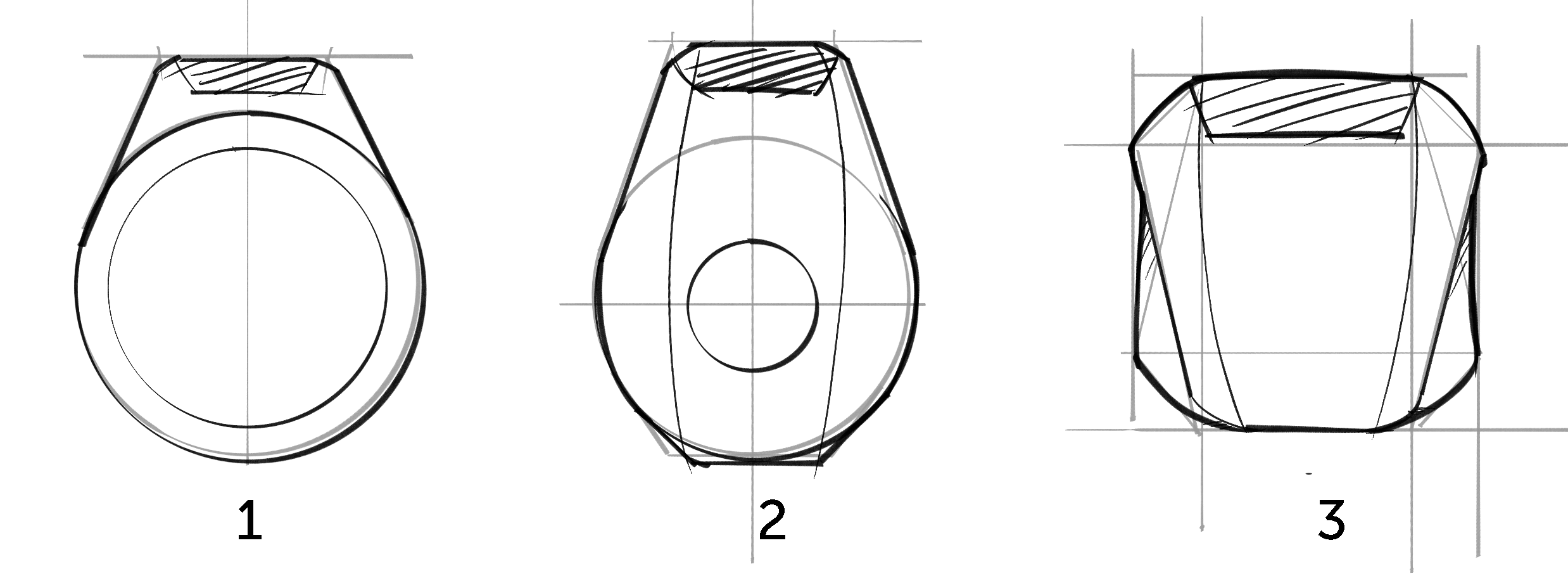

Form & CMF Study

The form design underwent eight iterations to achieve a balance between aesthetics, ergonomics, and functionality.

Three Major Iterations:

Ver 1.0

The initial concept design, which integrated a touchscreen interface and a scroll wheel.

Ver 1.1 – 2.0

Ergonomics were improved by adding a dedicated Fn key and an indicator light, and by incorporating two soft ridges onto the surface for a clearer layout.

Ver 3.0+

The shape was overhauled to avoid confusion between the joystick and the knob. Details were adjusted in each version to improve handling, comfort, and compatibility.

Shape Generation

The raw shape of the joystick is the result of the loft between two opposite trapezoids. The top surface is extruded and the bottom is trimmed to create a smooth, streamlined shape that fits naturally and securely in the palm, allowing for comfortable use.

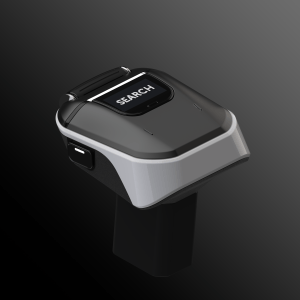

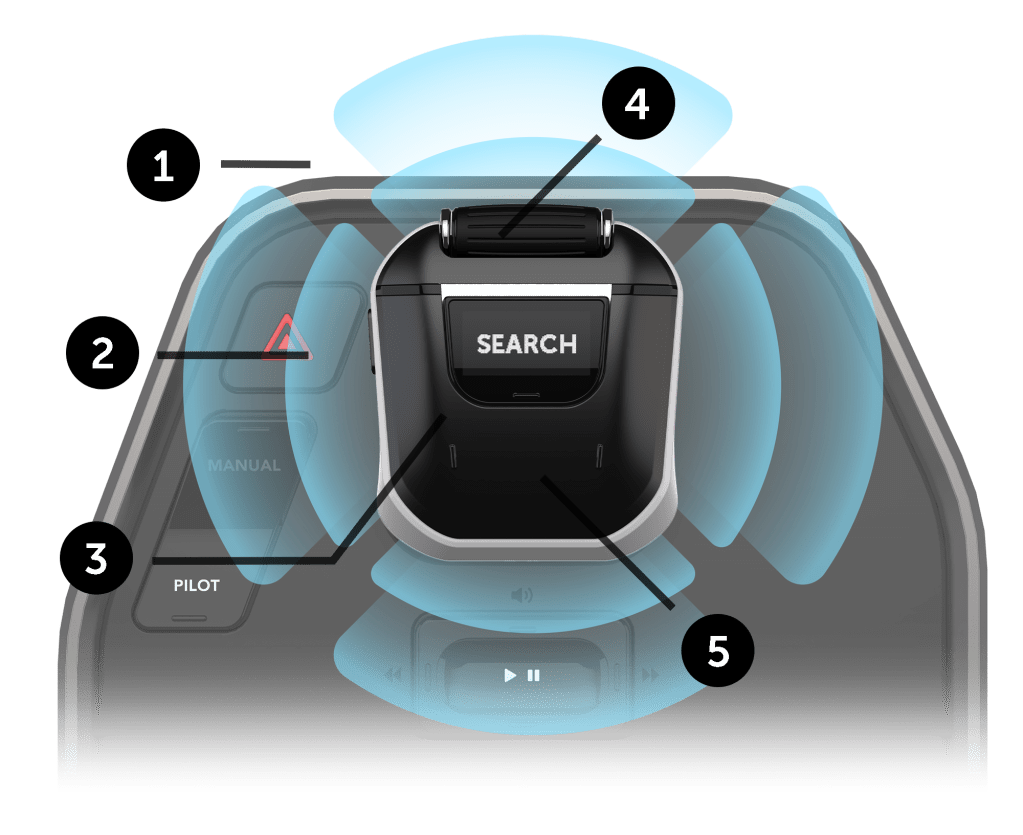

Joystick Controller

The controller is versatile, highly integrated, and fits comfortably in your palm. Users can easily input trip-related commands and interact with the infotainment system.

1. Activation Lock

When the lock is not activated, the joystick becomes fixed, allowing users to control the common infotainment system with the controller.

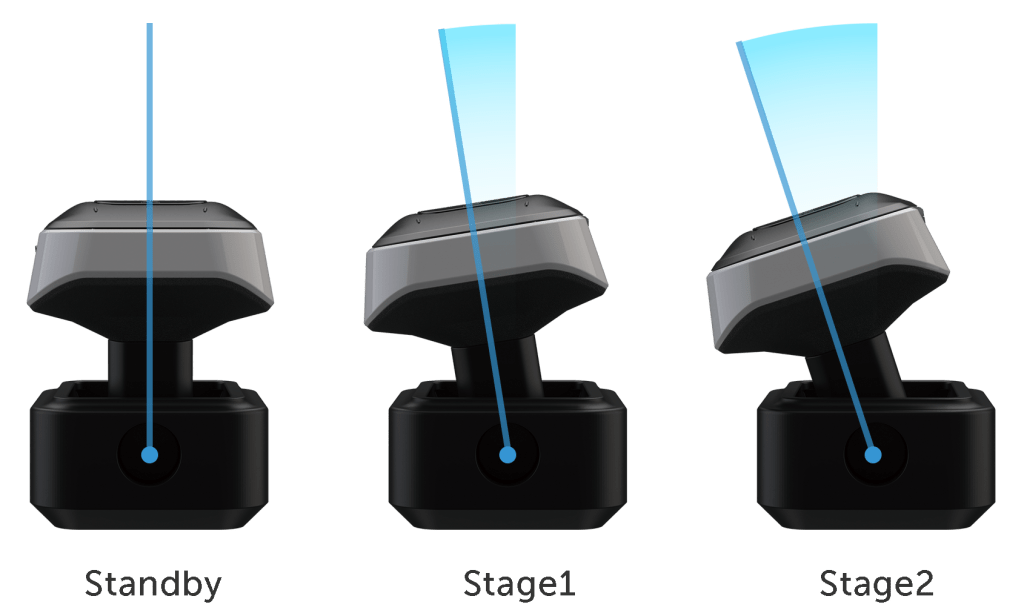

2. Two Travel Stage Joystick

Joystick mode is activated after the fingerprint lock is pressed.

The joystick features two travel stages:

• First travel stage: instant vehicle behaviors that do not deviate from the planned route, such as changing lanes, decelerating, and overtaking.

• Second travel stage: instant instructions related to changing the destination.
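One way to picture the two travel stages is as thresholds on how far the stick is pushed. The sketch below is only an illustration under stated assumptions: the normalized deflection range (0 to 1), the two threshold values, and the function name `classify_travel` are hypothetical and are not taken from the actual controller.

```python
from typing import Optional

# Assumed thresholds on normalized stick travel (0.0 = centered, 1.0 = full travel).
FIRST_STAGE_MIN = 0.2   # below this, the push is treated as no input
SECOND_STAGE_MIN = 0.7  # at or beyond this, the second travel stage is reached


def classify_travel(deflection: float, unlocked: bool) -> Optional[str]:
    """Map a stick deflection to a command type, or None if there is no input."""
    if not unlocked:
        # Without the activation lock, the joystick does not issue driving commands.
        return None
    if not 0.0 <= deflection <= 1.0:
        raise ValueError("deflection must be within 0.0 and 1.0")
    if deflection >= SECOND_STAGE_MIN:
        return "destination_related"  # second stage: changing the destination
    if deflection >= FIRST_STAGE_MIN:
        return "non_deviated"         # first stage: lane change, decelerate, overtake
    return None
```

Checking the second stage first keeps the two ranges mutually exclusive, so a deep push is never read as a first-stage command.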

3. Adaptive Fn Key

The adaptive function key lets users input context-dependent shortcuts or commands. For example:

- Search for an adjacent destination, or for music and video.

- Cancel a movement input in progress, such as an overtake.

- Long-press to switch between tasks through the app dock.

4. Scroll Wheel

Easily navigate to an option with the scroll wheel. Scroll the wheel to select, and then press the wheel to confirm.

5. Capacitive Touch Pad

The pad has a comfortable curved shape. Swipe left/right to go back/forward.

Design – Graphical User Interface

What information needs displaying?

Based on the previous study, the display should support users in the following tasks:

1. Locate a new/adjacent destination

2. Input a non-deviated movement command

3. Provide feedback

The task is to build a graphical interface that is clearly visible from a distance and easy to navigate with the controller.

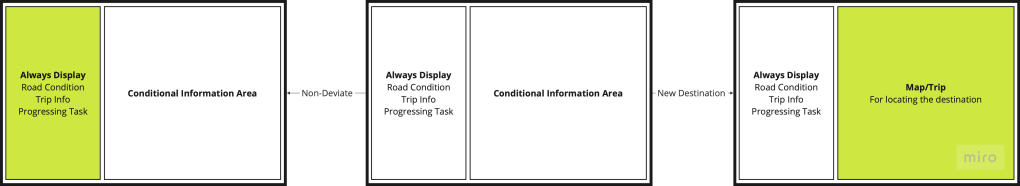

Basic Layout

The screen is split into two areas: always-on content and conditional content.

The always-display section presents information that must be constantly visible, such as:

- Road condition for manual driving and non-deviated input

- Trip and time information

- Ongoing tasks, such as system status and media playback

The conditional section exhibits information that is not necessary to display at all times and is based on user input, for example:

- Map/Trip for editing the trip

- Entertainment Content

Typically, the conditional section is larger to accommodate a wider range of information. However, both sections are adjustable based on the particular input.

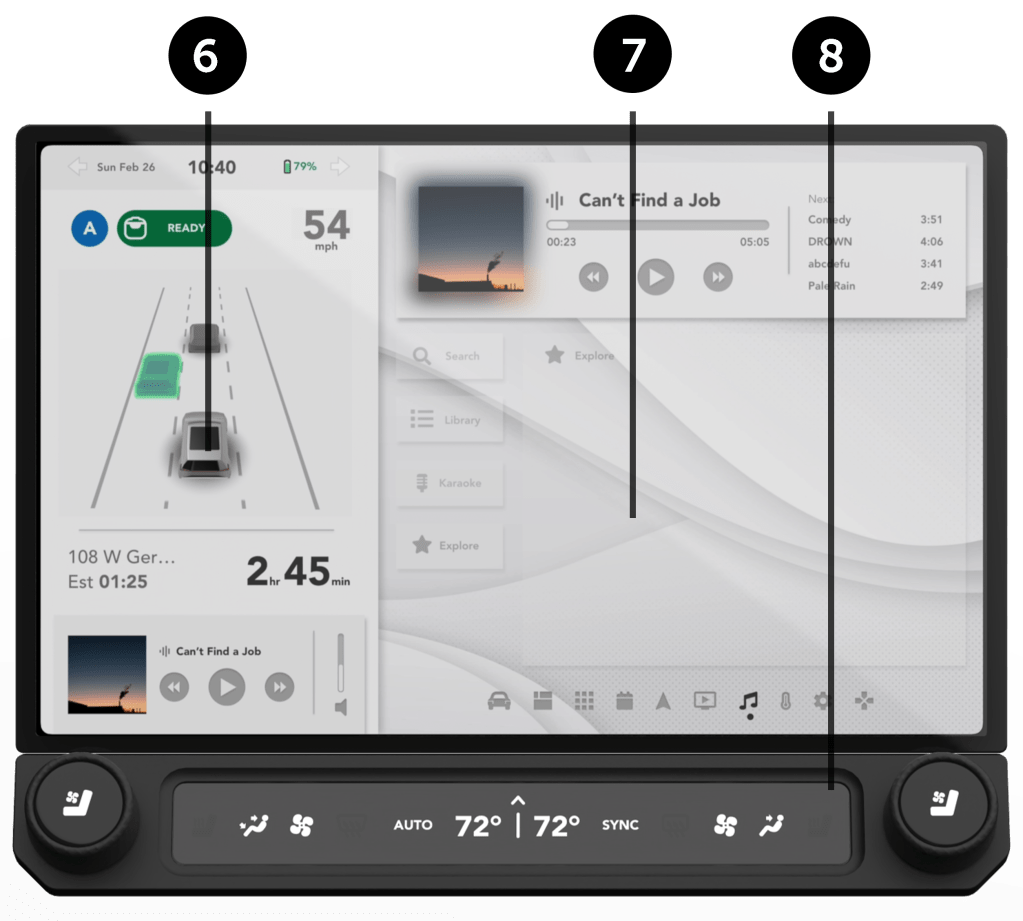

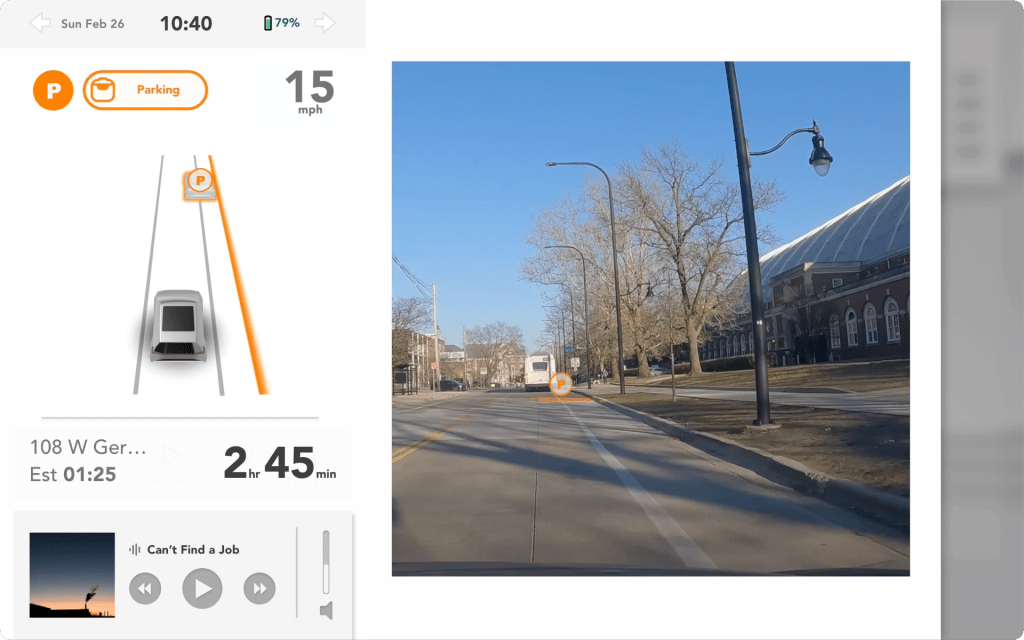

Overview

The interface allows users to see the surrounding road conditions and preview trip-related commands as they are input. It is designed to be clearly visible from a wide range of distances.

6. Road Condition & Command Preview

Users can safely and efficiently execute quick commands while staying informed about road conditions. The interface provides a preview of the instant command. A widget at the top of the window indicates execution status, providing immediate feedback to the user.

7. Apps/Task Area

Components are clearly laid out and easily navigated with the scroll wheel.

To switch between tasks, long-press the Fn key to roll out the app dock.

8. Comfort Control

Comfort control is separated from the main display, so users can easily read the information and make adjustments at any time. Two physical knobs and buttons enable fast adjustment and shortcuts.

Interface Breakdown

Destination-Related Input

As previously stated, the type of stimulus typically affects a user’s navigation behavior. When translating their actions in a traditional (manual driving) setting to a decision-making system, several modifications will occur:

Outside-In

When the destination is nearby, users may sometimes tap directly on the map in the “I see” scenario.

Inside-Out

If a user intends to search for a destination proactively in the “I want” scenario, the workflow typically remains unchanged from the conventional method.

Nevertheless, geographical factors also restrict the selection of workflows. For instance, if the screen size or map scale limits the location options, users may opt to search for the destination instead of browsing the map. Conversely, if the “I want” option is nearby, users may tap the location directly on the map.

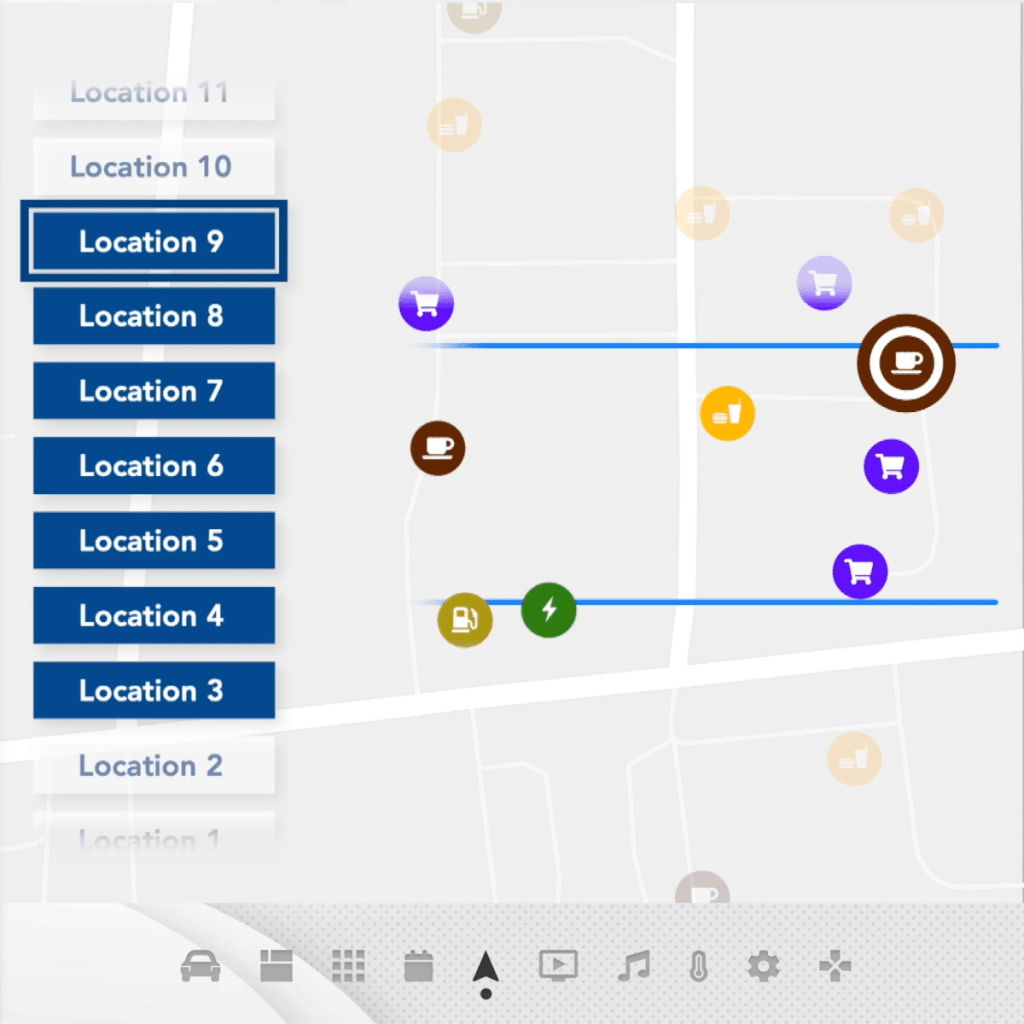

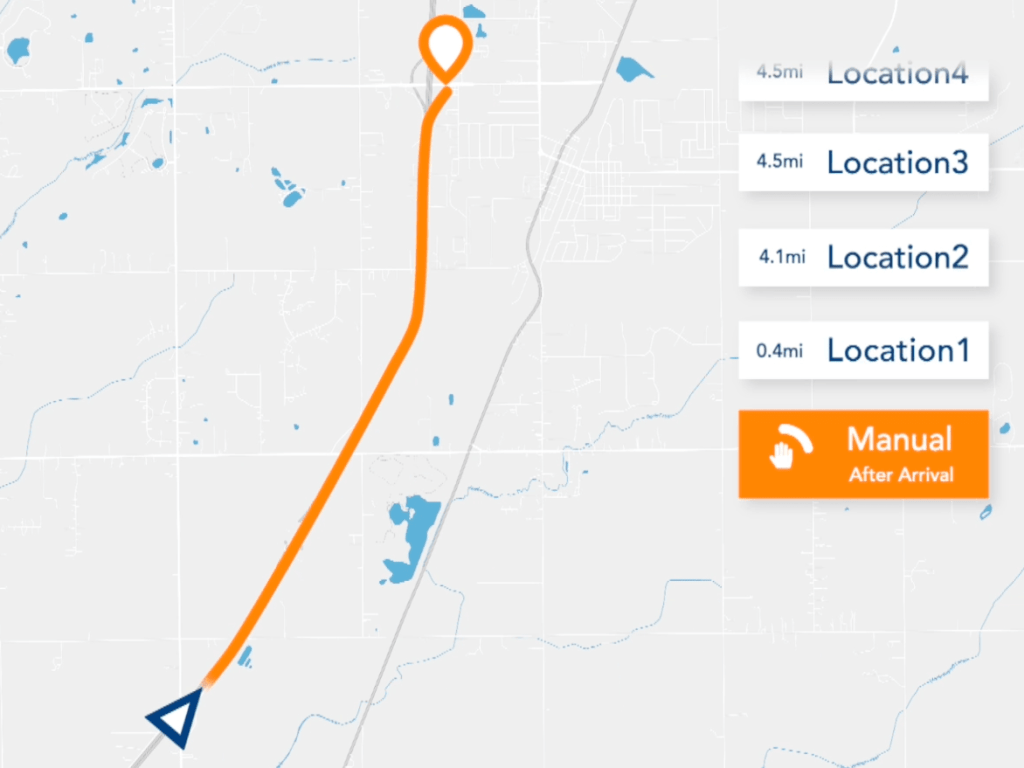

To enable rapid selection of new adjacent destinations, the interface employs a two-step design that follows a hierarchy from zoomed-out to zoomed-in.

The area selector will cover locations on either the right or left, depending on the direction in which the joystick is shifted. Covered location options are also listed beside the map. Users can scroll the selector forwards or backwards to choose areas in front of or already passed.

Once users have selected the desired area, they will then select the appropriate sub-option (if applicable) to confirm the new destination. All of these inputs can be executed using a single scroll wheel.
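The first step of this selection (side from the joystick, area from the scroll wheel) could be modeled along these lines. This is a hedged sketch only: the `Place` record, the `places_in_area` function, the along-route offsets, and the fixed 2 km area length are assumptions introduced for illustration, not details of the actual interface.

```python
from dataclasses import dataclass


@dataclass
class Place:
    name: str
    side: str         # "left" or "right" of the planned route
    offset_km: float  # along-route distance from the vehicle; negative = already passed


def places_in_area(places, side, area_index, area_length_km=2.0):
    """List the places covered by the area selector, nearest offset first.

    side comes from the direction the joystick is shifted. area_index 0 is
    the area that starts at the vehicle; scrolling forwards increments it and
    scrolling backwards decrements it (negative values are areas already passed).
    """
    start = area_index * area_length_km
    end = start + area_length_km
    covered = [p for p in places if p.side == side and start <= p.offset_km < end]
    return sorted(covered, key=lambda p: p.offset_km)
```

The returned list corresponds to the options shown beside the map; the second step would then pick one entry (and any sub-option) with the same scroll wheel.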

The UI design varies by area type:

In urban/suburban areas, the area selector directly covers options on the right or left.

When the vehicle is on a highway, the area selector is based on exits.

Users can search for their destination at any time while on the map/trip page.

They can also choose to take over control after exiting the highway.

Non-Deviated Input

Users do not need to find a new destination for non-deviated inputs, but because these inputs require immediate execution, users often need instant access to road information. The always-display section is therefore the ideal place for information about non-deviated input.

The visualization of traffic conditions and system status frees users from actively checking the traffic and allows them to maneuver the vehicle while performing non-driving-related tasks (leaning, chatting, working, etc.).

The interface consists of two sections: system status and road (traffic) condition.

System status includes automation status, command viability, and speed. Input validation is indicated by both color and text.

Similar to the system status section, the road (traffic) section provides users with a visual representation and feedback through animations that vary in color depending on their viability.

Controller Status: Deactivated

Controller Status: Activated

Viable

#178858

Attention

#FF9E3C

Alert

#BF2702
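Because validation is indicated by both color and text, the three states can be kept in a single lookup. The snippet below is a small sketch using the palette listed above; the enum and function names are assumptions for illustration, and the Alert value is written with a leading `#` to match the other two.

```python
from enum import Enum


class Viability(Enum):
    # Hex colors taken from the palette listed above.
    VIABLE = "#178858"
    ATTENTION = "#FF9E3C"
    ALERT = "#BF2702"


def status_indicator(state: Viability) -> dict:
    """Return the color and the text label shown together for one state."""
    return {"color": state.value, "text": state.name.capitalize()}
```

Pairing the label with the color in one place means the text cue is always available alongside the color cue.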

Special Condition: Roadside Parking

Roadside parking is an exception to the normal pattern of uninterrupted driving. While the vehicle remains on the planned route, it ceases driving, requiring users to manually choose a parking spot in certain situations.

Roadside parking can be a spontaneous decision during a road trip, a stop to drop off or pick up people, or a response to an emergency. Users therefore need an efficient way to specify the parking location.

The issue lies in how to interact with the system. Unlike specifying a location on the map as a destination-related input, it can be challenging to designate a parking spot on the digital map because:

- The map may not include enough geographical detail, especially in rural areas.

- Due to map scaling, users may have difficulty matching a real-world spot to its scaled digital representation.

The optimal solution for addressing this challenge is to avoid translation altogether. Augmented Reality (AR) technology enables users to directly choose a parking spot by using real-life references. When in roadside parking mode, a larger always-display section allows users to select the parking location through an AR preview window.

Final Outcome & Demo

Auto Sphere

Auto Sphere embodies a human-centered design approach, resulting in its joystick controller and graphical interface. By streamlining the decision-making process, the interface lets drivers easily and intuitively control or customize the L3/L4 automation of their vehicle, with a focus on delivering a seamless and user-friendly experience under most conditions.

Watch DEMO
